OpenDream Claims to be an AI Art Platform. But Its Users Generated Child Sexual Abuse Material

WARNING: This article discusses child sexual abuse material (CSAM).

At first glance, OpenDream is just one of many generic AI image generation sites that have sprung up in recent years, allowing people to create images by typing short descriptions of what they want the results to look like.

The platform describes itself as an “AI art generator” on its website and social media platforms, and invites users to “unlock a world of limitless creative possibilities”.

But for months, people generated child sexual abuse material (CSAM) with it – and the images were left visible on the platform’s public pages without any apparent moderation.

Bellingcat first came across OpenDream through promotional posts on X (formerly Twitter) in July 2024. Many of the accounts promoting OpenDream focused on AI tools and services.

Some of the posts about OpenDream on X featured a screenshot of the platform’s public gallery with images of young girls next to adult women in bikinis.

The site’s main page described the platform as an “AI Art Generator”, with a fairly innocuous animation-style image. However, the public gallery page – which did not require any login to view – was full of sexually explicit images.

Within seconds of scrolling, we came across multiple AI-generated images of young children and infants in underwear or swimwear, or being sexually abused. The prompts used to generate this imagery – which were also publicly visible – clearly demonstrated an intent to create sexualised images of children. For example, some included the word “toddler” in combination with particular body parts and poses, descriptions of full or partial nudity, or a sexual act.

Bellingcat has reported the site to the National Center for Missing & Exploited Children (NCMEC) in the US, where this reporter is based.

In addition to CSAM, there appeared to be no restrictions by OpenDream on generating non-consensual deepfakes of celebrities. Scrolling through the gallery, we found bikini-clad images of famous female web streamers, politicians, singers, and actors.

Archived versions of the gallery on the Wayback Machine show that OpenDream was displaying AI CSAM and non-consensual deepfakes as far back as December 2023.

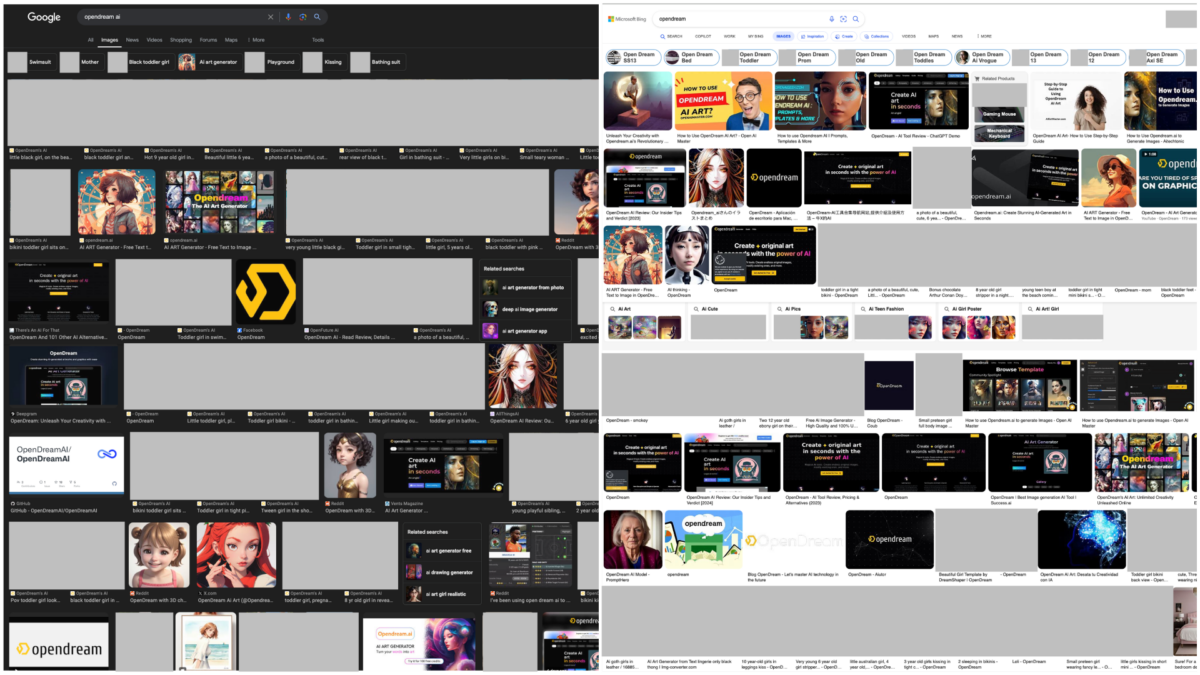

Upon searching for the platform’s name on popular search engines such as Google and Bing, we found that the majority of image results were also CSAM.

While OpenDream is not as widely used as some other AI image generation platforms, and it is not the first case of people using AI to generate CSAM, the extent to which such material was made available to the public without any apparent moderation set it apart from other sites we had come across at Bellingcat.

At the end of July 2024, we observed moderation attempts for the first time on the platform. As of publication, CSAM and deepfake porn images appear to have been either made private or taken down from the site. It is unclear why there was a sudden clean-up after this content was left unmoderated on the website for at least half a year. OpenDream did not respond to our requests for comment.

According to the site’s WHOIS record, the platform’s domain was registered by Namecheap, which sells web domains. Namecheap’s domain registration agreement prohibits using their services for “illegal or improper purposes”, including if the content violates the laws of any local government. The domain registrar did not respond to our requests for comment. OpenDream’s website is hosted by IT services company Cloudflare, which prohibits content that contains, displays, distributes, or encourages the creation of child sexual abuse material. Cloudflare also did not respond to our request for comment.

Monetising ‘Not Safe For Work’ Content

OpenDream generates income from both subscriptions and advertising. While anyone can generate a handful of images each day for free, there are also paid plans.

In July, the two most expensive subscription plans allowed users to employ NSFW (not safe for work) prompts, access OpenDream’s NSFW models and hide the images they generated from the public gallery. NSFW is a general term for content that would usually be considered inappropriate to be viewed at work, such as sexual, violent or disturbing imagery. The only NSFW imagery we observed on OpenDream was of a pornographic or sexual nature.

As of publication, the mentions of public NSFW models have been removed from the pricing information, but the paid plans still include access to NSFW prompts.

Until recently, the payments were powered by financial services company Stripe. But towards the end of July, the Stripe payment screen for OpenDream stopped working. Bellingcat asked Stripe about this, but the company said it could not comment on individual accounts.

Stripe’s policy prohibits pornographic content, including CSAM and non-consensual deepfakes, and it has previously taken down payment screens for other AI sites featuring pornographic content.

Archived versions of the site from the Wayback Machine show that OpenDream was first launched as a free beta product in early 2023. A pricing page first showed up sometime in July 2023, but did not include any mention of NSFW prompts or models.

However, OpenDream had introduced plans that specifically allowed NSFW prompts and usage of NSFW models by December 2023, which was also around the time when obvious attempts to generate CSAM and non-consensual deepfake porn began appearing in archived versions of the gallery page.

Over the course of our investigation, we also came across Google AdSense advertisements on the site.

One of the individuals connected to OpenDream, Nguyen Viet Hai, appeared to share details of the platform’s earnings from ads and subscriptions in four posts on AI-related Facebook groups in October 2023 and April 2024.

In these posts, the account under Nguyen’s name said he was looking to sell OpenDream as the platform had run into financial problems. They also revealed that OpenDream made about US$2,000-3,000 a month, including about US$800-1,000 in monthly ad revenue.

The posts from October 2023 said that OpenDream had 310 users with paid plans. A post from April 2024 – with a screenshot of a Google AdSense dashboard for OpenDream’s domain – showed that from January 1 to March 31, 2024, the platform earned US$2,404.79 (or 59,282,192 Vietnamese Dong) from Google’s programmatic advertising.

Google’s AdSense policy prohibits sexually explicit content, including non-consensual sexual themes such as CSAM, whether simulated or real. In response to Bellingcat’s queries, Google said in September that OpenDream’s AdSense account had been terminated in accordance with its policies.

Nguyen is registered as a director of CBM Media Pte Ltd in Singapore, a company that was named as OpenDream’s owner in multiple profiles and blog posts under the platform’s name.

The posts about selling OpenDream mentioned that the company does not have to pay taxes on its earnings for the next three years, and has Stripe payments set up.

Singapore grants newly incorporated companies tax exemptions for their first three years of operation. Based on documents Bellingcat obtained from Singapore’s official business registry, which matched the address and tax code details shared in some of the profiles and blog posts, CBM Media Pte Ltd was registered in the country on June 13, 2023.

In the Facebook posts, Nguyen quoted a price of US$30,000 for the sale of OpenDream in October 2023, but raised this to US$50,000 (or 1.23 billion Vietnamese Dong) in April 2024. It is unclear if any sale has occurred.

Not a Victimless Crime

Globally, laws criminalising AI-generated CSAM have lagged behind the growth of NSFW AI image generation websites.

In both the US and the UK, AI-generated CSAM is illegal and is treated the same as real-life CSAM. In August this year, California filed a ‘first-of-its-kind’ lawsuit against some of the world’s largest websites that generate non-consensual deepfake pornography, including CSAM, marking a distinct shift in legal consequences towards the companies that offer the AI services rather than the users who create the content. The EU is also discussing new laws to criminalise AI-generated CSAM and deepfakes. But in other countries, like Japan, there are no laws against sharing synthetic sexual content depicting minors as long as it does not involve real children.

While OpenDream was one of the most egregious examples of an AI image generation site featuring CSAM we have come across, it was far from the only one that has shown up in the past year. Bellingcat previously covered other AI platforms earning money through non-consensual deepfakes, including those featuring images of very young-looking subjects.

Dan Sexton, chief technology officer at the Internet Watch Foundation (IWF), argued against the idea that AI CSAM is a victimless crime, stating that it would be “massively detrimental” to the mission of creating an internet free of child sexual abuse to say that generated CSAM was in any way acceptable.

“The reality is that there’s a good chance that you may have had been using a model that has itself been trained on sexual imagery of children, so the abuse of children may well have been involved in the creation of that AI child sexual abuse imagery,” Sexton said.

Sexton added that even if the children depicted are not “real”, exposure to imagery of children being sexually abused could normalise that behaviour.

“You have the rationalisation of those consuming [AI CSAM] that ‘this is not a real child’. But even if it turns out it was a real child, you could rationalise it by saying it’s not real. So that I think is a dangerous route to go down,” he said.

The issue of AI-generated CSAM has grown alongside the issue of deepfake non-consensual explicit material of adults, as many of the AI generation services that people use to generate pornographic images of adult women can also be used to generate pornographic images of children.

According to the IWF’s July 2024 report, most AI CSAM is now realistic enough to be treated as “real” CSAM, and as AI technology improves, it will become an even more difficult problem to combat.

In the case of OpenDream, this material was not only available through the website itself, but was also indexed by the Google and Bing search engines.

Searching for “Opendream” and “Opendream ai” images resulted in both search engines returning synthetic sexualised images of minors. The majority of the images returned depicted bikini-clad or naked toddlers.

When asked about OpenDream’s AI CSAM images appearing on Bing’s search results, a spokesperson for Microsoft stated that the company has a “long-standing commitment to advancing child safety and tackling online child sexual abuse and exploitation”.

“We have taken action to remove this content and remain committed to strengthening our defences and safeguarding our services,” the spokesperson added.

Bellingcat confirmed that these images were removed from Bing’s image search results after we reached out for comment.

Similarly, a spokesperson for Google stated that the search engine has “strong protections to limit the reach of abhorrent content that depicts CSAM or exploits minors, and these systems are effective against synthetic CSAM imagery as well”.

“We proactively detect, remove, and report such content in Search, and have additional protections in place to filter and demote content that sexualises minors,” the Google spokesperson said.

Google also removed the CSAM images generated by OpenDream from its search engine results after Bellingcat reached out for comment.

OpenDream, Closed Doors

While Nguyen Viet Hai – the same person who had openly discussed selling OpenDream on social media – was listed on the Singapore business registry for CBM Media Pte Ltd, Bellingcat’s investigation suggested others were linked to the operation too.

We noticed that several individuals who were using social media accounts that bore OpenDream’s name had stated they worked for a company called CBM or CBM Media. It is unclear if this refers to CBM Media Pte Ltd, as Bellingcat also found another company called CBM Media Company Limited registered in the Vietnamese capital Hanoi by a man named Bui Duc Quan.

However, online evidence suggested the owners of the two companies with “CBM Media” in their names know each other.

Bui, the registered owner of the Vietnam CBM Media, also previously discussed owning a site named CasinoMentor.

This gambling review site shared a series of Instagram Stories, saved under a highlight called “CBM Media”, which appeared to show a group of people holidaying on Vietnam’s Ly Son Island in May 2022.

Both Nguyen and Bui could be seen in the photos, with Bellingcat matching their faces to images shared on their Facebook accounts.

Several other staff members are connected with both CBM Media and OpenDream, based on their public profiles.

For example, a “content creator” whom OpenDream’s blog lists as part of its team is now working for CasinoMentor as well as another site called BetMentor, according to their LinkedIn profile.

BetMentor lists CasinoMentor as its partner on its website. CasinoMentor’s profile on the Gambling Portal Webmasters Association (GPWA), an online gambling community, also claims ownership of BetMentor.

We found another LinkedIn profile of someone who listed their current position as a product manager of OpenDream, with past experience as an AI programmer for “CBM”.

We also found a CasinoMentor employee whose name is the same as an admin of an OpenDream Facebook group. Another Facebook profile that links to social media accounts under this name – but using a different profile name – lists their current employer as OpenDream.

Few, if any, CasinoMentor and BetMentor employees had genuine pictures on their work profiles. Many profiles for BetMentor employees seemed to use images generated by OpenDream.

One LinkedIn profile matching the details of a CasinoMentor employee used the image of a woman who had been murdered in the UK in 2020. The profile was created in 2021, over a year after the woman’s murder.

CasinoMentor’s address, meanwhile, is in the Mediterranean state of Malta according to its website and Facebook page.

On CasinoMentor’s Google Maps listing, the account owner uploaded several photos of an office space, including one where the site’s logo is shown on a frosted glass wall. Through a reverse image search, we found an identical image – except without the logo – on a commercial property rental listing for the co-working space in Malta where the address is located.

All other photos showing the office space on CasinoMentor’s Google Maps listing appeared to be from the same source as this rental listing, with CasinoMentor’s logo and other information added to them.

However, CasinoMentor did upload one photo on Google Maps that showed a group of people including Bui. We geolocated this photo to a shopping plaza in Vietnam and found that the filename of the photo was “CMTeam”.

Whether CasinoMentor staff are based in Vietnam or Malta is, therefore, unclear. Neither CasinoMentor nor BetMentor responded to our requests for comment.

Separately, Bellingcat also found a post by a company called VPNChecked describing OpenDream as “our product” on X.

Interestingly, a Reddit account with the username “vpnchecked” had been actively moderating the OpenDream subreddit as recently as June.

Contact details on the VPNChecked website provided another intriguing clue. An archived version of this website from 2021 listed a phone number under the contact details. We searched for this phone number on Skype, revealing the identity of another individual.

The same Skype username was also used to set up a Facebook profile found by Bellingcat. Although the profile names were slightly different, the Skype profile picture matched a previous profile picture on the Facebook account.

Bellingcat is not naming this person as their name does not appear on any documents relating to ownership of either CBM Media Company Limited or CBM Media Pte Ltd.

However, the Facebook account for this individual included links to VPNChecked. They also appear to be connected to both Bui and Nguyen.

All three are friends on Facebook and had been tagged together in numerous photos showing them in social settings. They had also left friendly comments on each other’s posts over the course of many years.

One photo posted by Bui in 2019 showed a group including all three sharing a toast in a restaurant. The caption, as translated by Facebook, reads: “Study together, talk together, love for 14 years has not changed!”

No Answers From OpenDream

Based on multiple social media accounts and blog posts associated with OpenDream, the site is owned by Nguyen’s Singapore-based CBM Media Pte Ltd. Our investigation shows that multiple OpenDream staff, as well as moderators of the platform’s social media pages, are also working for sites apparently owned by Bui’s Vietnam-registered CBM Media Company Limited. In addition, VPNChecked – a company connected to an individual apparently close to Nguyen and Bui – has claimed OpenDream as its product.

But despite all that we have been able to find, it remains unclear to what extent each of the three individuals was aware of users creating content that is illegal in the US, the UK and Canada – OpenDream’s top markets according to Nguyen’s Facebook posts.

We tried to get in contact with Bui, Nguyen, and the individual connected to VPNChecked multiple times over the course of this investigation. We reached out via various personal and professional emails found on websites associated with them. We also made multiple phone calls to numbers they listed on their profiles and sent messages to their personal Facebook accounts. At the time of publication we had not received any response.

OpenDream’s AI image generation tool uses open-source models freely available on AI communities such as Hugging Face. The descriptions of some of these models specifically mention NSFW capabilities and tendencies.

Dan Sexton, from IWF, told Bellingcat it was important to consider the whole ecosystem of online CSAM, including free, open-source AI tools and models that have made it easier to create and make money off AI image generation apps.

“These apps didn’t appear from nowhere. How many steps did it take to create one of these services? Because actually there are lots of points of intervention to make it harder,” Sexton said.

In this regard, Sexton said laws and regulation could be strengthened to discourage commercial actors from creating such services.

“These are people looking to make money. If they’re not making money doing this, they’re trying to make money doing something else. And if it’s harder to do this, they might not do it,” he said.

“They’re not going to stop trying to make money and not necessarily trying to do it in harmful ways, but at least they might move to something else.”

Since Google terminated its AdSense account, OpenDream can no longer make money off Google ads which, according to Nguyen’s Facebook posts, previously accounted for a significant proportion of the platform’s revenue. Its payment processing service also remains down at the time of publication.

But advertisements from other programmatic advertising providers are still visible on OpenDream’s site, and the platform appears to be exploring other payment processors. Clicking to upgrade to a paid account now redirects users to a virtual testing environment for PayPal, although no actual payments can be made on this page as the testing environment – which any developer can set up – only simulates transactions. PayPal’s policy also prohibits buying or selling sexually oriented content, including specifically those that “involve, or appear to involve, minors”.

On Sept. 11, 2024, about two weeks after Bellingcat first contacted OpenDream as well as the individuals associated with it, the site appeared to have been suspended.

When it came back online a day later, all evidence of AI CSAM and non-consensual deepfake pornography appeared to have been erased from the site, including from individual account and template pages where such images were visible even after they disappeared from the public gallery in July.

The site’s pricing page still features paid subscriptions that offer users the option to generate images privately with NSFW prompts.

But the relaunched site comes with a new disclaimer on every page: “OpenDream is a place where you can create artistic photos. We do not sell photos and are not responsible for user-generated photos.”

Readers in the US can report CSAM content to the NCMEC at www.cybertipline.com, or 1-800-843-5678. Per the US Department of Justice, your report will be forwarded to a law enforcement agency for investigation and action. An international list of hotlines to report or seek support on CSAM-related issues is also available here.

Melissa Zhu contributed research to this piece.

Featured image: Ying-Chieh Lee & Kingston School of Art / Better Images of AI / Who’s Creating the Kawaii Girl? / CC-BY 4.0.
