Automatically Discover Website Connections Through Tracking Codes

Using SpyOnWeb API

SpyOnWeb.com continually crawls the web looking for tracking codes, nameservers, and other pieces of information that help show connections between websites. Their API has numerous pricing tiers, starting from free (which is perfect for this blog post) and going all the way up to plans that allow a large number of calls per month.

Head over to: https://api.spyonweb.com/

Sign up for an account, and in the main API dashboard screen there is an access token that you will need for the rest of this blog post:

Note that you should not share your access token with anyone else. If you accidentally leak it, just click the little button near the red arrow shown and it will regenerate your token for you.

Now that we have our SpyOnWeb token, let’s start writing the code for this post.

Prerequisites

You will need a couple of Python libraries for this blog post. To get them use pip to install:

Additionally, if you would like to do the visualization work at the end of the post you will need to download Gephi from here.

Coding It Up

Warm up your coding fingers, fire up your favourite IDE (I use WingIDE, it is awesome) and start a new file called website_connections.py. The full source code can be downloaded from here.

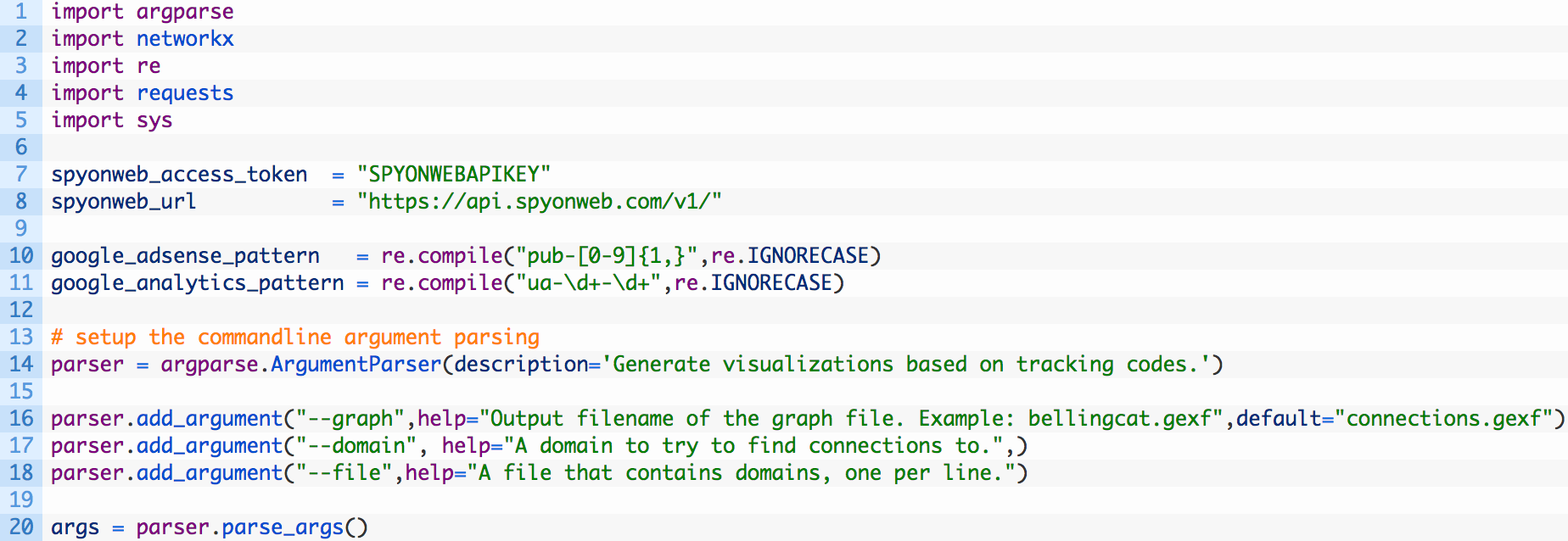

- Lines 1-5: we are importing all of the relevant Python modules that we need to use in our script.

- Lines 7-8: we define a variable spyonweb_access_token where you will paste in your access token from the previous section, and we set up a variable to hold the base URL for the SpyOnWeb API calls (8).

- Lines 10-11: there are two regular expression patterns set up for Google AdSense and Google Analytics. We will extract these codes from target domains that we provide to the script.

- Lines 13-20: here we set up the command-line argument parsing so that we can easily pass in a single domain or a file that contains a list of domains, and specify the output file name for our graph.
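As a rough sketch of the setup those bullets describe, something like the following would do the job. The two regular expressions, the base URL and the flag names (`--domain`, `--file`, `--graph`) are assumptions made here for illustration, so treat them as placeholders rather than the exact contents of the original listing.

```python
import argparse
import re

# Placeholder: paste your own token here (and never publish a real one).
spyonweb_access_token = "YOUR_ACCESS_TOKEN"

# Assumed base URL for the API; confirm it against the API documentation.
spyonweb_url = "https://api.spyonweb.com/v1/"

# Assumed patterns: AdSense publisher IDs look like pub-<digits>,
# Analytics property IDs look like UA-<digits>-<digits>.
google_adsense_pattern = re.compile(r"pub-[0-9]{1,}", re.IGNORECASE)
google_analytics_pattern = re.compile(r"ua-\d+-\d+", re.IGNORECASE)


def build_parser():
    """Build the command-line parser; the flag names are hypothetical."""
    parser = argparse.ArgumentParser(
        description="Map website connections through tracking codes.")
    parser.add_argument("-d", "--domain", help="A single domain to check.")
    parser.add_argument("-f", "--file", help="A file with one domain per line.")
    parser.add_argument("-g", "--graph", default="website_connections.gexf",
                        help="Output file name for the graph.")
    return parser


# Example: parse an explicit argument list instead of sys.argv.
args = build_parser().parse_args(["--domain", "example.com"])
```

Passing an explicit list to `parse_args` is handy while experimenting in an interpreter; in the script itself you would call `parse_args()` with no arguments so that it reads the real command line.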

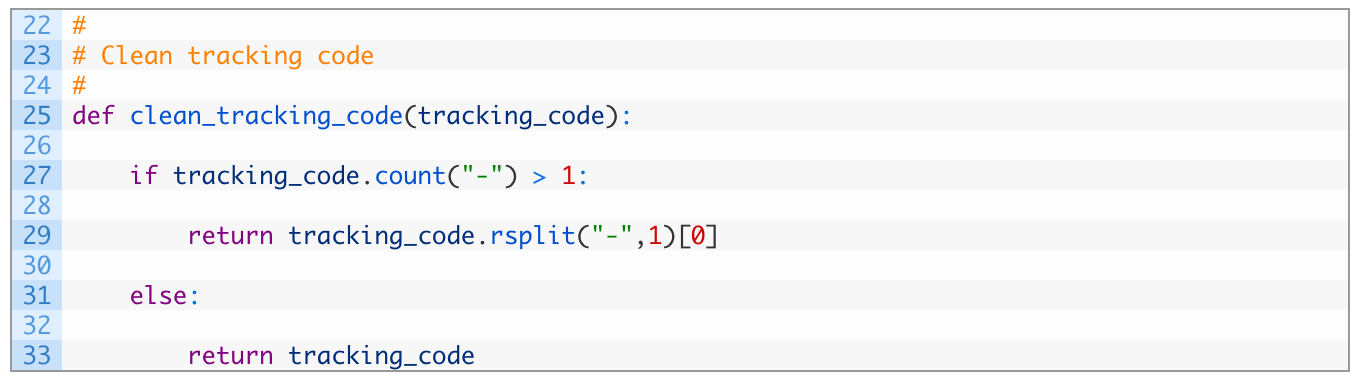

We will add a simple function that cleans up the tracking codes before they are graphed. Let’s get this out of the way first:

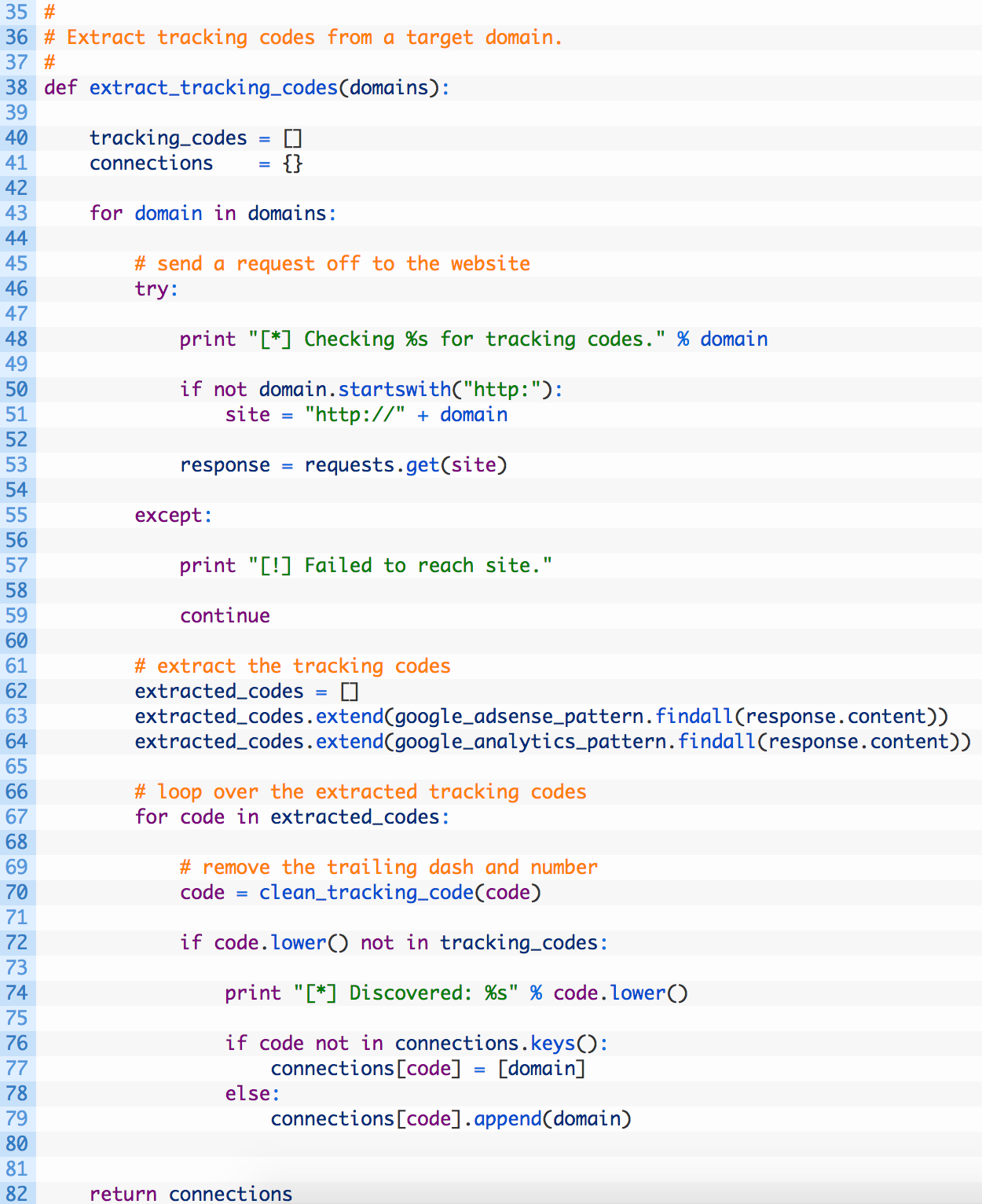
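What follows is only a minimal sketch of what such a helper might do, not the original listing. It assumes that cleaning means dropping the trailing property number from an Analytics code (so that ua-101199457-1 becomes UA-101199457, which matches the sample output later in this post) and normalizing the case; the real function may do more or less than this.

```python
def clean_tracking_code(tracking_code):
    """Normalize a tracking code so that equivalent codes compare equal.

    The behaviour here is assumed for illustration only.
    """
    code = tracking_code.strip()

    if code.lower().startswith("ua-"):
        # UA-<account>-<property>: keep only the UA-<account> part.
        parts = code.split("-")
        return "UA-" + parts[1]

    # AdSense publisher IDs are kept whole, just lower-cased.
    return code.lower()
```

Normalizing early means the connections dictionary never ends up with two keys for what is really the same account.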

Perfect, now let’s add our first function that will be responsible for extracting tracking codes directly from our target domain(s). Add the following code to your script:

- Line 38: we define our extract_tracking_codes function to accept a list of domains that we will walk over to perform the extraction.

- Lines 43-: we begin walking the list of domains (43) and then we build a proper URL (50-51) before sending off a web request to the target domain (53). If we fail to reach the target site we simply move on to the next domain (57).

- Lines 62-64: if we successfully reached the target site we set up a blank list to hold our extracted codes (62), we attempt to find all of the Google AdSense codes using our regular expression (63) and then do the same for the Google Analytics codes (64).

- Lines 67-79: we walk through the list of extracted codes (67), and pass each one off to our clean_tracking_code function to clean and normalize it (70). Next we test if we already have this code (72) and if we don’t then we add it to our connections dictionary so that we can track this code against the current target domain.

- Line 82: now we return the connections dictionary so that we can process the results later.
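The steps in those bullets can be sketched roughly as below. This is an illustration under a few assumptions: the regular expressions and the cleaning helper are simplified stand-ins, the page is fetched with `urllib` from the standard library rather than any particular third-party HTTP library, and the `fetch` parameter is a hypothetical addition that lets you try the function without touching the network.

```python
import re
import urllib.request

# Assumed patterns for AdSense and Analytics codes.
ADSENSE_PATTERN = re.compile(r"pub-\d+", re.IGNORECASE)
ANALYTICS_PATTERN = re.compile(r"ua-\d+-\d+", re.IGNORECASE)


def clean_tracking_code(code):
    """Simplified stand-in for the cleaning helper (assumed behaviour)."""
    if code.lower().startswith("ua-"):
        return "UA-" + code.split("-")[1]
    return code.lower()


def fetch_html(url):
    """Default fetcher: return the page body as text."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def extract_tracking_codes(domains, fetch=fetch_html):
    """Map each tracking code found to the list of domains that host it."""
    connections = {}

    for domain in domains:
        # Build a full URL if we were only given a bare domain.
        site = domain if domain.startswith("http") else "http://" + domain

        try:
            html = fetch(site)
        except Exception:
            # Could not reach the site, so move on to the next domain.
            continue

        extracted_codes = []
        extracted_codes.extend(ADSENSE_PATTERN.findall(html))
        extracted_codes.extend(ANALYTICS_PATTERN.findall(html))

        for code in extracted_codes:
            code = clean_tracking_code(code)
            if code not in connections:
                connections[code] = [domain]
            elif domain not in connections[code]:
                connections[code].append(domain)

    return connections
```

Calling `extract_tracking_codes(["example.com"])` would fetch the live page, while passing your own `fetch` function lets you feed it saved HTML instead.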

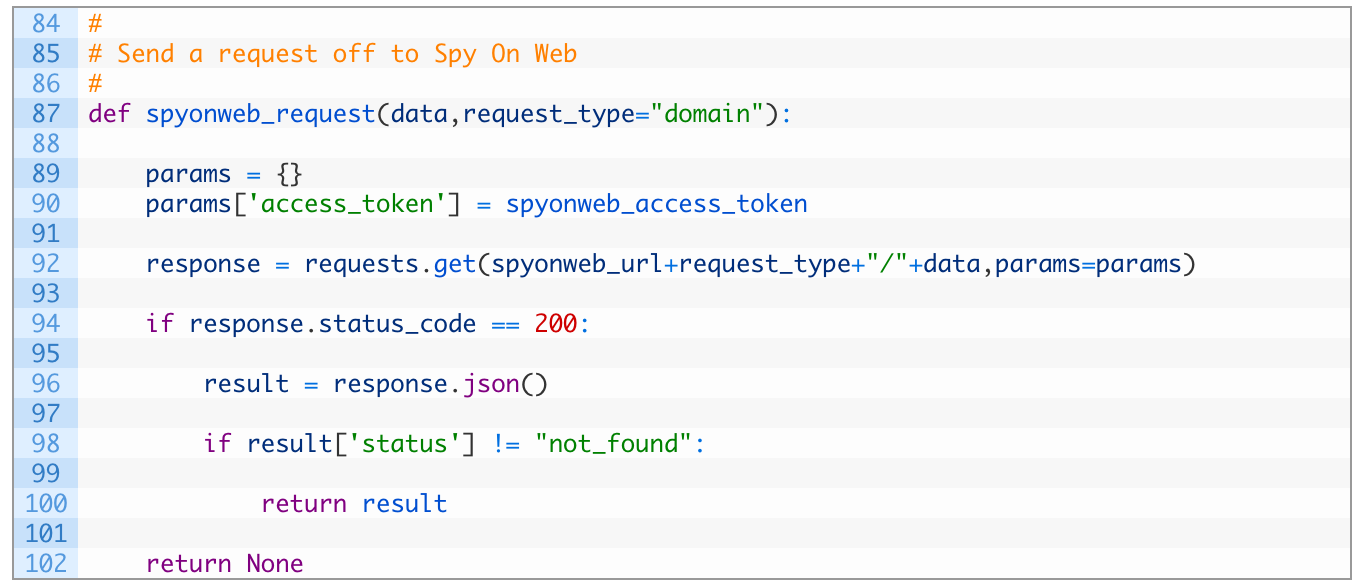

Now let’s set up a function that will handle sending off requests to the SpyOnWeb API. Continue to add code where you left off:

- Line 87: we set up our spyonweb_request function to receive data, which can be a domain, a tracking code or any other supported input that SpyOnWeb will take in. We also set up the request_type parameter that will form part of the URL for the SpyOnWeb request format.

- Lines 89-90: we create a params dictionary that contains an access_token key that contains our Spyonweb API access token. This dictionary will be passed along in the HTTP request to Spyonweb.

- Line 92: we send off the request to Spyonweb using the URL we dynamically build, and passing in our params dictionary.

- Lines 94-102: we check to make sure we received a valid HTTP response back (94), and if the request is successful we parse the JSON (96). We then test the dictionary result (98) to see if we received valid results from SpyOnWeb and if so, we return the entire dictionary. If we don’t get any results we return None (102).
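A hedged sketch of such a request function is shown below. The URL layout (base URL, then request type, then the data), the `"status": "found"` check on the response, and the injectable `get` parameter are all assumptions for the sake of a runnable example; check them against the API documentation before relying on them. The transport uses `urllib` from the standard library so the sketch has no dependencies.

```python
import json
import urllib.error
import urllib.parse
import urllib.request

spyonweb_access_token = "YOUR_ACCESS_TOKEN"    # placeholder token
spyonweb_url = "https://api.spyonweb.com/v1/"  # assumed base URL


def http_get(url, params):
    """Default transport: return (status_code, body_text)."""
    full_url = url + "?" + urllib.parse.urlencode(params)
    try:
        with urllib.request.urlopen(full_url, timeout=10) as response:
            return response.status, response.read().decode("utf-8")
    except urllib.error.HTTPError as error:
        return error.code, ""


def spyonweb_request(data, request_type="domain", get=http_get):
    """Query the API for a domain or tracking code; None means no results."""
    params = {"access_token": spyonweb_access_token}

    # Assumed URL layout: <base>/<request_type>/<data>
    url = spyonweb_url + request_type + "/" + urllib.parse.quote(data, safe="")
    status_code, body = get(url, params)

    if status_code != 200:
        return None

    result = json.loads(body)

    # Assumed response field: a status of "found" signals usable results.
    if result.get("status") == "found":
        return result

    return None
```

Returning None for every failure case keeps the calling code simple: it only has to test whether it got a dictionary back.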

Now we are going to build a function that will deal with sending off specific requests to Spyonweb for our analytics codes. Continue punching in code where you last left off:

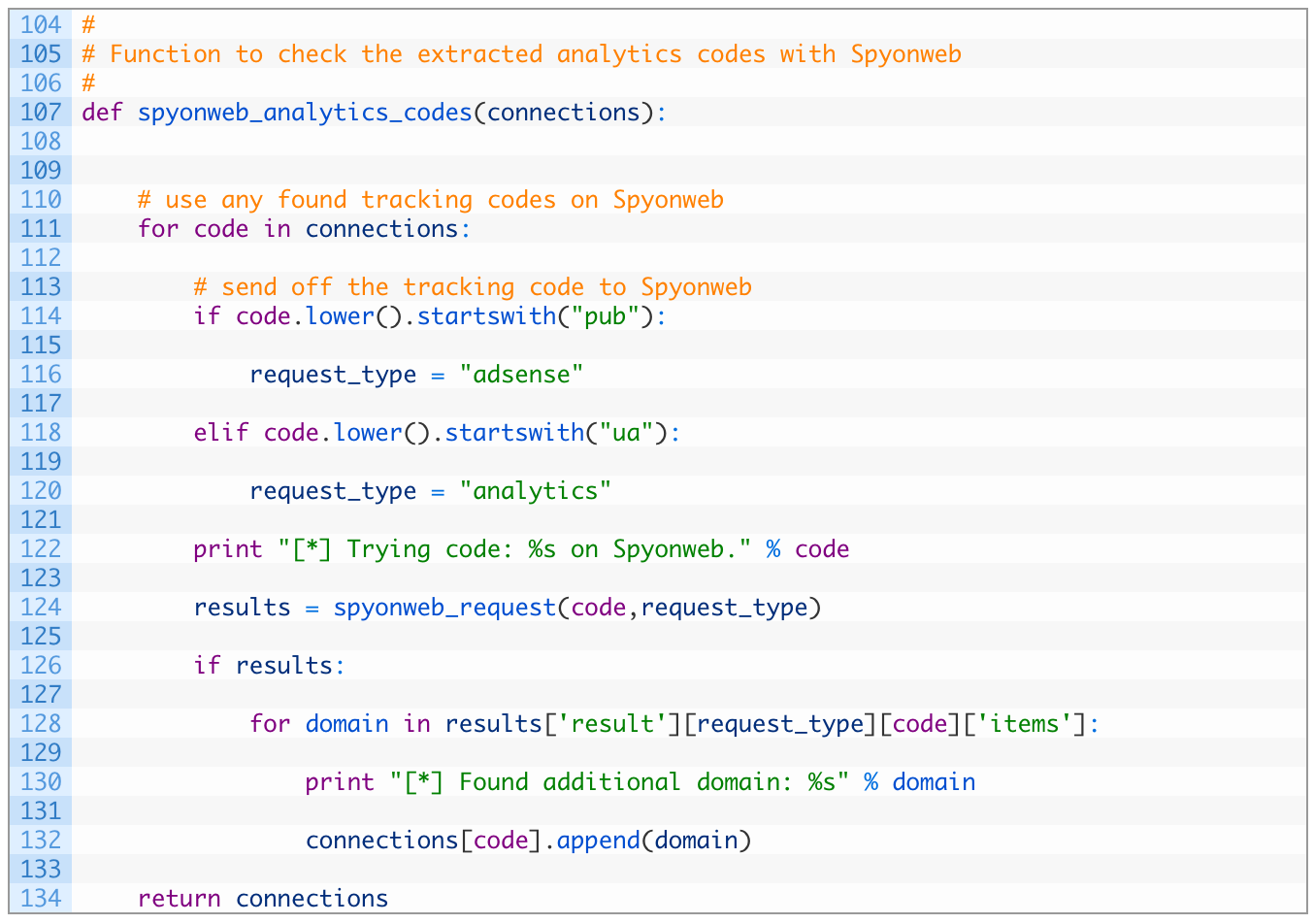

- Line 107: we define the spyonweb_analytics_codes function to take a single parameter connections which is the dictionary of tracking codes and how they are mapped to domains that host them.

- Lines 111-120: we begin looping through the connections keys (which are the tracking codes) (111), and then test to see if it is an Adsense code (114) or whether it is an Analytics code (118). We set the request_type appropriately once we have determined what type of code we are dealing with.

- Line 124: we send off the request to the Spyonweb API to see if there are other domains that can be mapped to the current tracking code.

- Lines 126-134: if we receive valid results back from SpyOnWeb (126), we loop over the domains (128) and add each one to the list of domains associated to the current tracking code (132). When we are done we return the newly updated connections dictionary (134).
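To make that loop concrete, here is a small sketch. It assumes the request types are named "adsense" and "analytics", and it replaces the real API call with a hypothetical `lookup` callable that simply returns a list of domains (or None), so the JSON handling is deliberately left out.

```python
def spyonweb_analytics_codes(connections, lookup):
    """Add domains that share a tracking code already in `connections`.

    `lookup(code, request_type)` is a hypothetical callable that returns an
    iterable of domain names, or None when there is nothing for the code.
    """
    for code in connections:
        # Decide which kind of request to make from the code's prefix.
        if code.lower().startswith("pub"):
            request_type = "adsense"
        elif code.lower().startswith("ua"):
            request_type = "analytics"
        else:
            continue

        domains = lookup(code, request_type)

        if domains:
            for domain in domains:
                if domain not in connections[code]:
                    connections[code].append(domain)

    return connections
```

Only the lists inside the dictionary are changed while looping, never the set of keys, so it is safe to iterate over `connections` directly here.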

Now we are going to add a function that will allow us to retrieve domain reports from Spyonweb. Domain reports can tell us about additional AdSense or Analytics codes that have been associated to the domain, and potentially additional connections to other domains of interest.

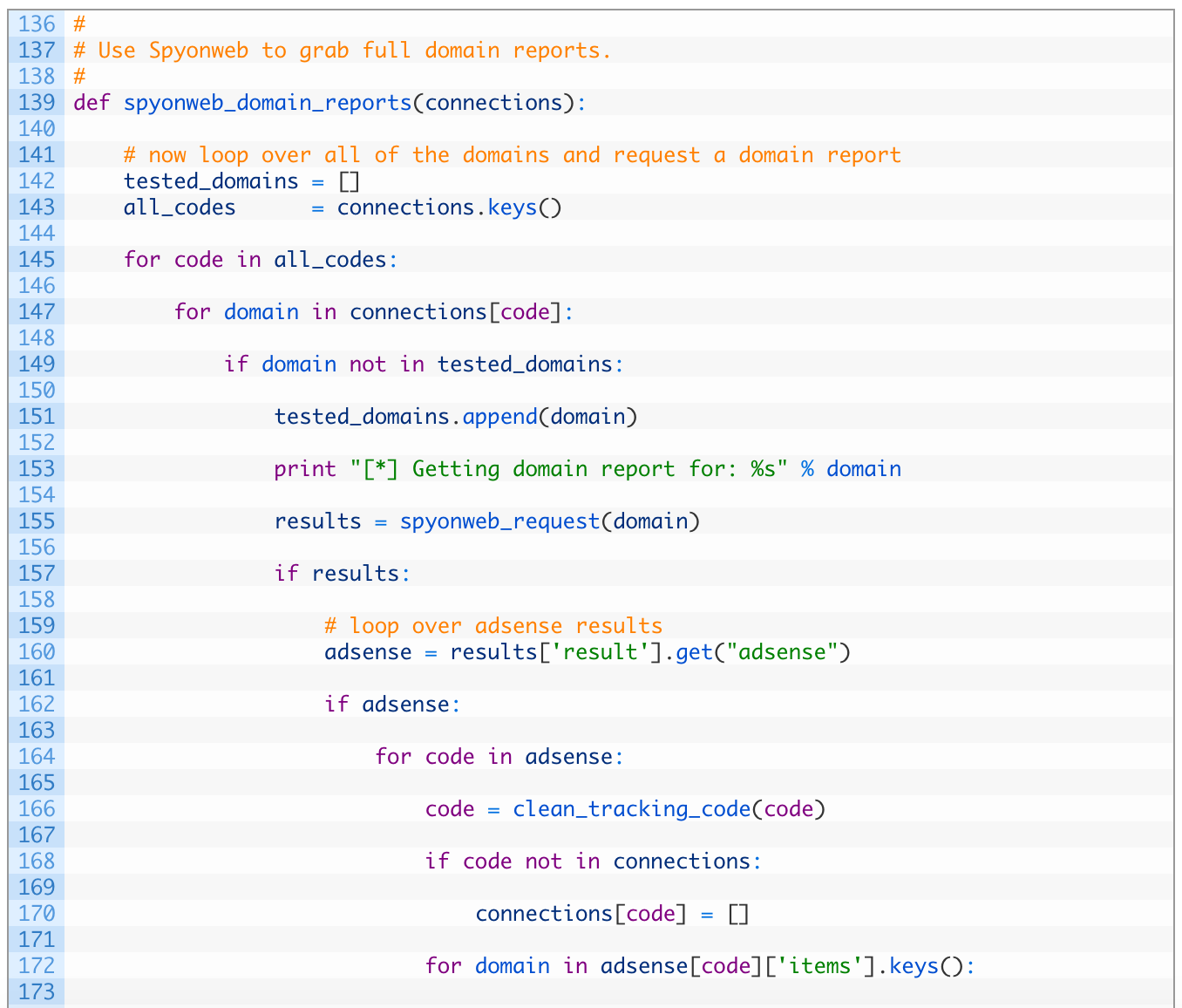

- Line 139: we define our spyonweb_domain_reports function to take in a single connections parameter, which is our main dictionary that we have been passing around up to this point.

- Lines 142-143: we set up an empty list to track which domains we have checked (142) and we load up all of the tracking codes into a list (143).

- Lines 145-155: we loop through all of the codes (145), and then we loop through each domain associated with that code (147). If we haven’t already checked this domain (149), we add the domain to the tested list (151) and then we send off a request to Spyonweb to get a domain report for the current domain (155).

- Lines 157-170: if we receive valid results back from SpyOnWeb (157) we attempt to check for any AdSense codes (160). If we have results for AdSense (162), we loop over all of the AdSense codes returned (164), clean each one up (166) and, if we don’t already have the code in our connections dictionary (168), add it as a new key (170).

- Lines 172-178: we loop over the domains that are associated to the Adsense code (172), and if we don’t already have the domain tracked (174) we add it to our connections dictionary, associating it to the current tracking code.

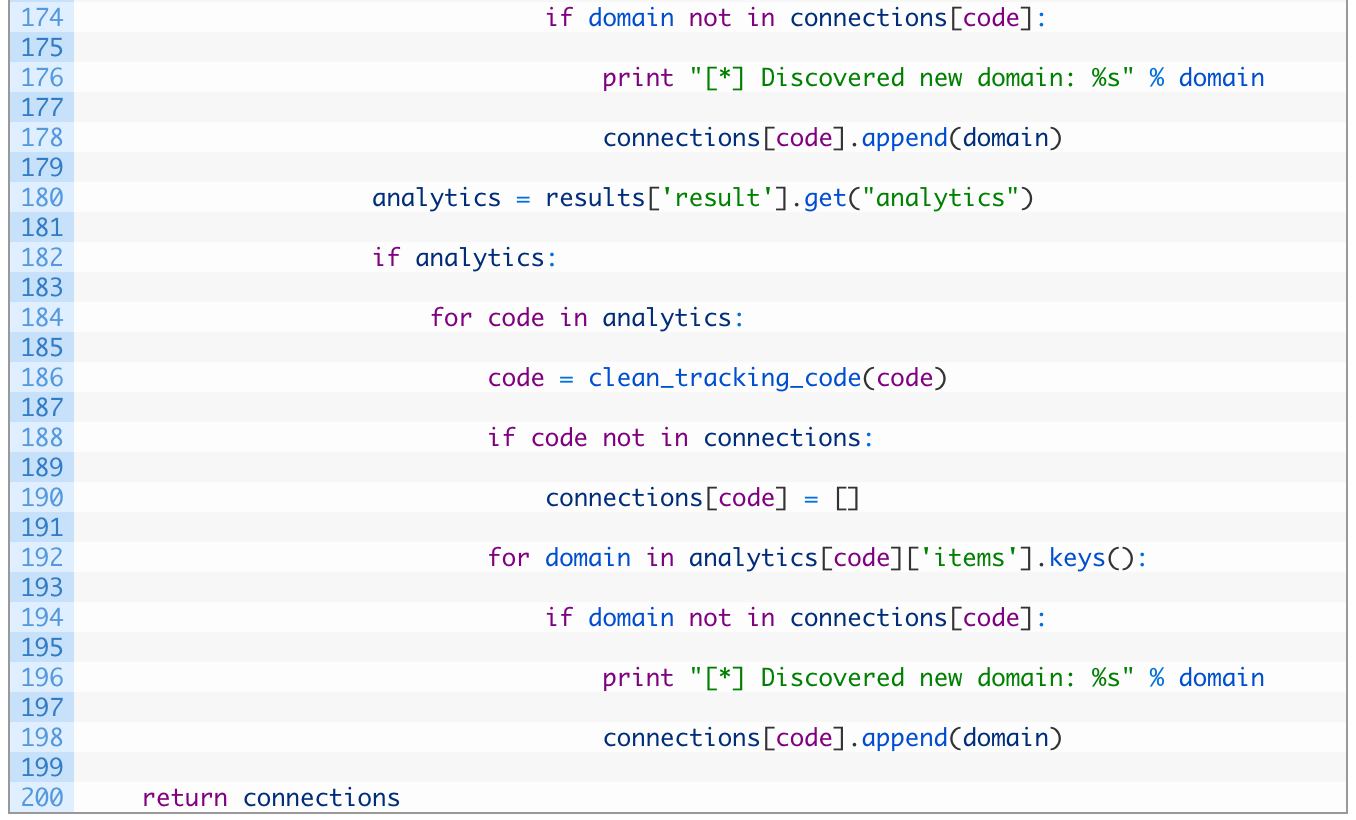

The next block of code is nearly identical to the blocks from 157-170 and 172-178 except we are doing it for the Google Analytics codes. I know the Python purists out there will complain that we are repeating code, but alas, here we are.
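For anyone who would rather avoid the repetition, one option is to handle both code types in a single inner loop. The sketch below shows the idea. It is not the original function: `get_report` is a hypothetical callable, the report shape it returns is a simplification invented for this example, and the code-cleaning step is left out for brevity.

```python
def spyonweb_domain_reports(connections, get_report):
    """Expand `connections` using per-domain reports.

    `get_report(domain)` is a hypothetical callable returning None or a dict
    shaped like {"adsense": {code: [domains]}, "analytics": {code: [domains]}}.
    """
    tested_domains = []
    all_codes = list(connections.keys())  # snapshot: keys are added below

    for code in all_codes:
        # Copy the list because it may grow while we walk it.
        for domain in list(connections[code]):
            if domain in tested_domains:
                continue
            tested_domains.append(domain)

            report = get_report(domain)
            if not report:
                continue

            # One loop covers both code types instead of two copied blocks.
            for code_type in ("adsense", "analytics"):
                for new_code, new_domains in report.get(code_type, {}).items():
                    linked = connections.setdefault(new_code, [])
                    for new_domain in new_domains:
                        if new_domain not in linked:
                            linked.append(new_domain)

    return connections
```

Taking a snapshot of the keys up front matters: adding keys to a dictionary while iterating over it directly raises a RuntimeError in Python 3.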

Now let’s add our final function, which will be responsible for graphing the connections between domains and tracking codes so that we can open a graph file in Gephi (or other tools) to visualize the results.

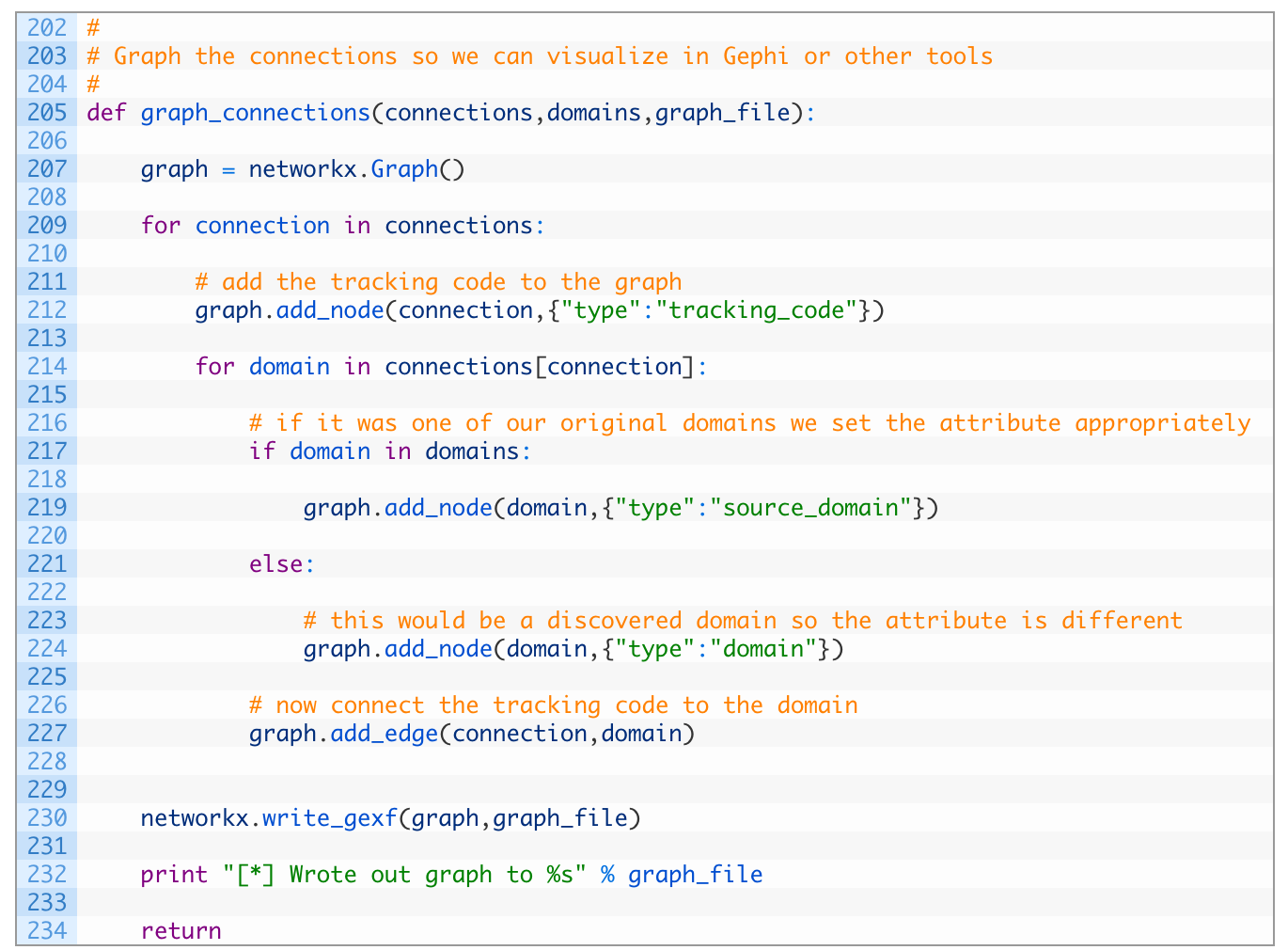

- Line 205: we define the graph_connections function that receives our well groomed connections dictionary, the list of starting domains and the filename we want to output the graph to.

- Line 207: we initialize a new networkx Graph object.

- Lines 209-212: we begin looping over each tracking code key in the connections dictionary (209) and then we add that tracking code as a node in the graph, setting an optional node attribute called type to “tracking_code”. This attribute will allow us to color the graph later in Gephi.

- Lines 214-227: we loop over all of the domains that are associated to the current tracking code (214), and we check if the domain is one of our starting domains (217) and if so we add the domain as a node in the graph, setting the type to a “source_domain”. If it is not one of our original starting domains (221) we add the node to the graph just setting it as a normal “domain” (224). The last step is to add an edge (a line) between the tracking code and the domain (227).

- Line 230: now that we have added all tracking codes and domains, and drawn lines between them, we use the write_gexf function to write out the graph to a file.
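The graphing logic described above might look something like the following sketch. Splitting the work into a plain-Python helper (`build_graph_elements`, a hypothetical name) and a thin networkx wrapper is a choice made here so the node typing can be checked on its own; the original function may well do everything in one pass.

```python
def build_graph_elements(connections, domains):
    """Return (nodes, edges); nodes maps each name to its type attribute."""
    nodes = {}
    edges = []

    for code, linked_domains in connections.items():
        nodes[code] = "tracking_code"

        for domain in linked_domains:
            # Starting domains get their own type so they stand out in Gephi.
            nodes[domain] = "source_domain" if domain in domains else "domain"
            edges.append((code, domain))

    return nodes, edges


def graph_connections(connections, domains, graph_file):
    """Write the connections out as a GEXF file using networkx."""
    import networkx  # imported here so the helper above works without it

    graph = networkx.Graph()
    nodes, edges = build_graph_elements(connections, domains)

    for name, node_type in nodes.items():
        graph.add_node(name, type=node_type)

    graph.add_edges_from(edges)
    networkx.write_gexf(graph, graph_file)
```

In Gephi the type attribute shows up as a node column, which is what lets you partition and color the nodes by it.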

Now let’s add the final pieces of code that will tie all of our functions together. You are almost done!

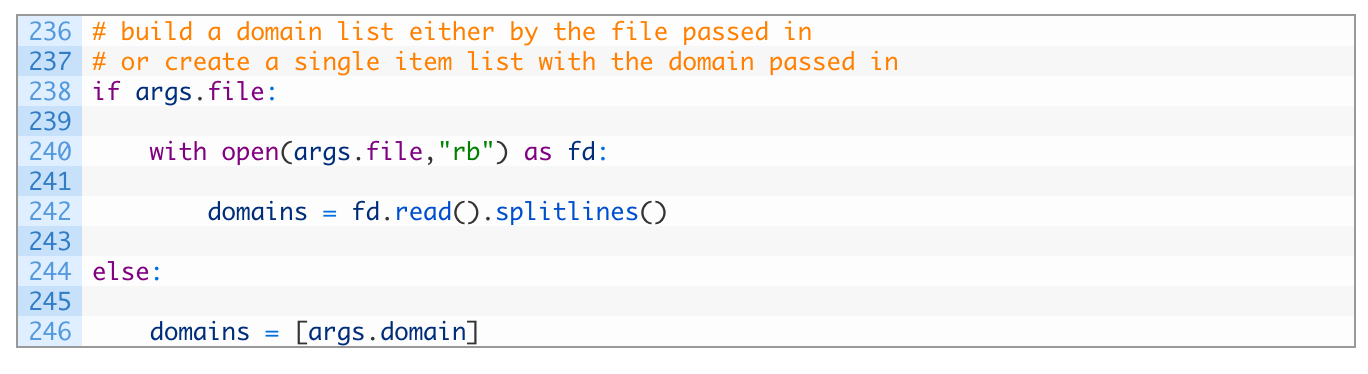

This little chunk of code is pretty straightforward. If we received a file name as a commandline parameter we open the file, and read it in line-by-line. If not then we just take the domain parameter that was passed in to the script.
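A minimal sketch of that logic is below. The function name `load_domains` and its parameters are hypothetical; in the script this would most likely sit inline and read from the parsed command-line arguments.

```python
def load_domains(domain=None, file_path=None):
    """Return the list of starting domains from a file or a single value."""
    if file_path:
        with open(file_path, "r") as handle:
            # One domain per line; skip blank lines and stray whitespace.
            return [line.strip() for line in handle if line.strip()]

    return [domain] if domain else []
```

Stripping each line avoids a subtle bug where a trailing newline ends up inside the domain name and every request for it fails.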

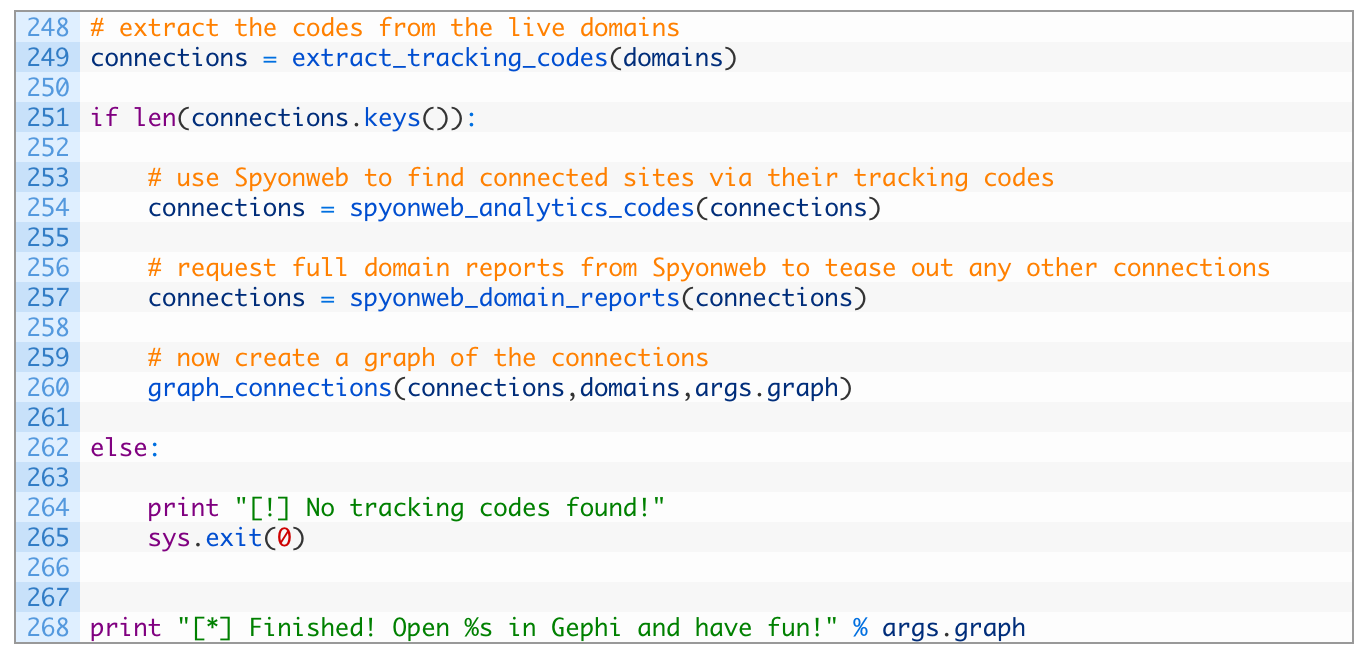

- Line 249: we start by calling the extract_tracking_codes function which will kickstart our now infamous connections dictionary.

- Lines 251-260: if we find some tracking codes (251), we search Spyonweb for the tracking codes (254), and then query Spyonweb for the domain reports (257). The last step is to call our graph_connections function to produce a graph file (260).

And that is that! You are now ready to try testing this script out on some real data!

Let It Rip!

If you run the script with some commandline arguments such as:

[*] Checking southafricabuzz.co.za for tracking codes.

[*] Discovered: pub-8264869885899896

[*] Discovered: pub-8264869885899896

[*] Discovered: ua-101199457-1

[*] Trying code: UA-101199457 on Spyonweb.

[*] Trying code: pub-8264869885899896 on Spyonweb.

[*] Found additional domain: www.indiatravelmantra.com

[*] Found additional domain: www.societyindia.com

…

[*] Discovered new domain: 022office.com

[*] Getting domain report for: indiayatraa.com

[*] Getting domain report for: www.mantraa.com

[*] Wrote out graph to southafrica.gexf

[*] Finished! Open southafrica.gexf in Gephi and have fun!

Now you can open up the southafrica.gexf file in Gephi and examine it for relationships. A brief video is below on how you can do this with ease:

There are a few things you can do for homework to enhance this script. For example, you could query the Wayback Machine for tracking codes that a site might have had in the past (see other blog post here), or you could recursively request domain reports from SpyOnWeb for any new domains that you discover. This could produce a big graph, but has the potential to increase your overall coverage.
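If you want a starting point for the recursive idea, here is one possible shape for it: a breadth-first walk with a depth cap, so the graph cannot grow without bound. Everything in it is illustrative. `get_related` is a hypothetical callable standing in for whatever returns the domains linked to a given domain (for example, the domains found in its domain report).

```python
def expand_domains(start_domains, get_related, max_depth=2):
    """Breadth-first walk over related domains, capped at `max_depth` hops.

    `get_related(domain)` is a hypothetical callable that returns the domains
    sharing a tracking code with `domain`, or None if there are none.
    """
    seen = set(start_domains)
    frontier = list(start_domains)

    for _ in range(max_depth):
        next_frontier = []
        for domain in frontier:
            for related in get_related(domain) or []:
                if related not in seen:
                    seen.add(related)
                    next_frontier.append(related)
        if not next_frontier:
            break
        frontier = next_frontier

    return seen
```

Keeping a `seen` set means each domain is only ever requested once, which matters when you are on a plan with a limited number of API calls.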

Questions? Feedback? Shoot me an email and let me know! justin@automatingosint.com